What you get with n8n over half a decade

With the recent Fireship video about n8n, I expect a new wave of people to try out my favorite automation tool. I have been using n8n since 2021, long before the AI boom, so consider this retrospective a collection of tips and tricks from a long-time user and huge fan.

My writing on n8n over the years:

- 2021 Favorite things (2021 December)

- The joy of making small tools (2025 March)

- No Algo, a feed to beat doom scrolling with a bit of AI (2025 September)

Setting expectations

I need to set some expectations for readers. If you're not comfortable with the basics of the web ecosystem and its tools, adopting n8n can be a tough journey. Without technical knowledge of how these tools and services operate, it's hard to use them efficiently or secure them properly. I've come up with two litmus tests as a self-assessment:

LITMUS TEST ONE: Generally, we should accept a user's authentication token via

1. query parameter

2. request header

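The generally accepted answer is via a request header. With HTTPS, both headers and the query string are encrypted in transit, but URLs (query string included) routinely end up in server and proxy access logs and browser history, and can leak through Referer headers, so a token in the query string is far easier to expose. If your answer wasn't an immediate and confident "Option Two!", I strongly advise you to proceed with caution.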
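To make the difference concrete, here is a minimal Python sketch using only the standard library: the client attaches the token as an Authorization header so it never becomes part of the URL, and the receiving side only ever reads it from that header. The Bearer scheme and both function names are illustrative assumptions, not how n8n itself checks credentials.

```python
import hmac
import urllib.request


def build_authed_request(url: str, token: str) -> urllib.request.Request:
    """Client side: attach the token as a header so it never becomes part of the URL."""
    return urllib.request.Request(url, headers={"Authorization": f"Bearer {token}"})


def is_authorized(headers: dict[str, str], expected_token: str) -> bool:
    """Server side: read the token from the Authorization header only."""
    # HTTP header names are case-insensitive, so normalize before looking up.
    normalized = {name.lower(): value for name, value in headers.items()}
    scheme, _, token = normalized.get("authorization", "").partition(" ")
    if scheme != "Bearer" or not token:
        return False
    # Constant-time comparison so response timing doesn't reveal partial matches.
    return hmac.compare_digest(token.encode(), expected_token.encode())
```

A request like GET /webhook/links?token=s3cret simply fails this check, which is the point: the token has no way in through the query string.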

LITMUS TEST TWO: Which statement is true?

1. Webhooks are a subset of RESTful APIs

2. Webhooks are not RESTful APIs

This one is more nuanced. RESTful APIs follow an architectural pattern that uses HTTP standards to describe and provide resources. That definition does not fit webhooks well: webhooks are just a language-agnostic way for systems to send messages to each other. Sure, both use HTTP methods to send information, but in my opinion that alone is not a sufficient qualifier for either of them, so I'd pick option two.
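The difference is easier to see in code. With a REST API the client pulls a resource when it wants it (say, GET /links/42); with a webhook, the system where something happened pushes an event to a URL the receiver registered in advance. The minimal standard-library sketch below plays both sides of a webhook on localhost; the /hooks/link-saved path and the event shape are made up for illustration.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer

# Events the receiver has accepted, in arrival order (stands in for "do something").
received: list[dict] = []


class WebhookReceiver(BaseHTTPRequestHandler):
    """Receiver side: a URL that another system POSTs events to."""

    def do_POST(self) -> None:
        length = int(self.headers.get("Content-Length", "0"))
        received.append(json.loads(self.rfile.read(length)))
        # Acknowledge quickly; a webhook response usually carries no resource.
        self.send_response(204)
        self.end_headers()

    def log_message(self, format: str, *args) -> None:
        pass  # silence the default per-request logging


def start_receiver() -> HTTPServer:
    """Listen on a free localhost port in a background thread."""
    server = HTTPServer(("127.0.0.1", 0), WebhookReceiver)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def send_webhook(url: str, event: dict) -> int:
    """Sender side: push an event when it happens instead of waiting to be polled."""
    req = urllib.request.Request(
        url,
        data=json.dumps(event).encode(),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        return resp.status
```

After server = start_receiver(), sending {"event": "link.saved"} to f"http://127.0.0.1:{server.server_address[1]}/hooks/link-saved" returns 204 and the event lands in received. Nothing in that exchange names or represents a resource, which is why webhooks don't fit neatly under REST.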

What n8n doesn't give you

In case I haven't been enough of a downer yet, let's start with what n8n doesn't do for you.

- n8n is essentially a glue service. You need to run your own services beside it. This means no persistent data in workflows: you'll need to set up and manage (or pay for managed instances of) all your dependencies, such as vector databases, relational databases, and Redis instances. n8n provides excellent connectors for these services, but running and maintaining them is on you.

- The community version does not have granular access control. Since I am using it for personal automations and I run it in my homelab behind a VPN with minimal public access, I am not bothered by it.

Update on 2025-10-01: n8n recently announced a native data table as a beta in 1.113.3. But I still think it's a good idea to persist your data outside of n8n to avoid vendor lock-in. I've been using a local Redis instance to persist data between flows, but more recently I packaged and deployed a PocketBase instance to serve some simple data-table needs.

What do I actually use n8n for?

As I have written in The joy of..., n8n is the perfect glue between services. If you want to dig deeper into my workflows, drop me a message on LinkedIn. No guarantees that I will reply tho. But without further ado, here are my use cases as of the time of writing.

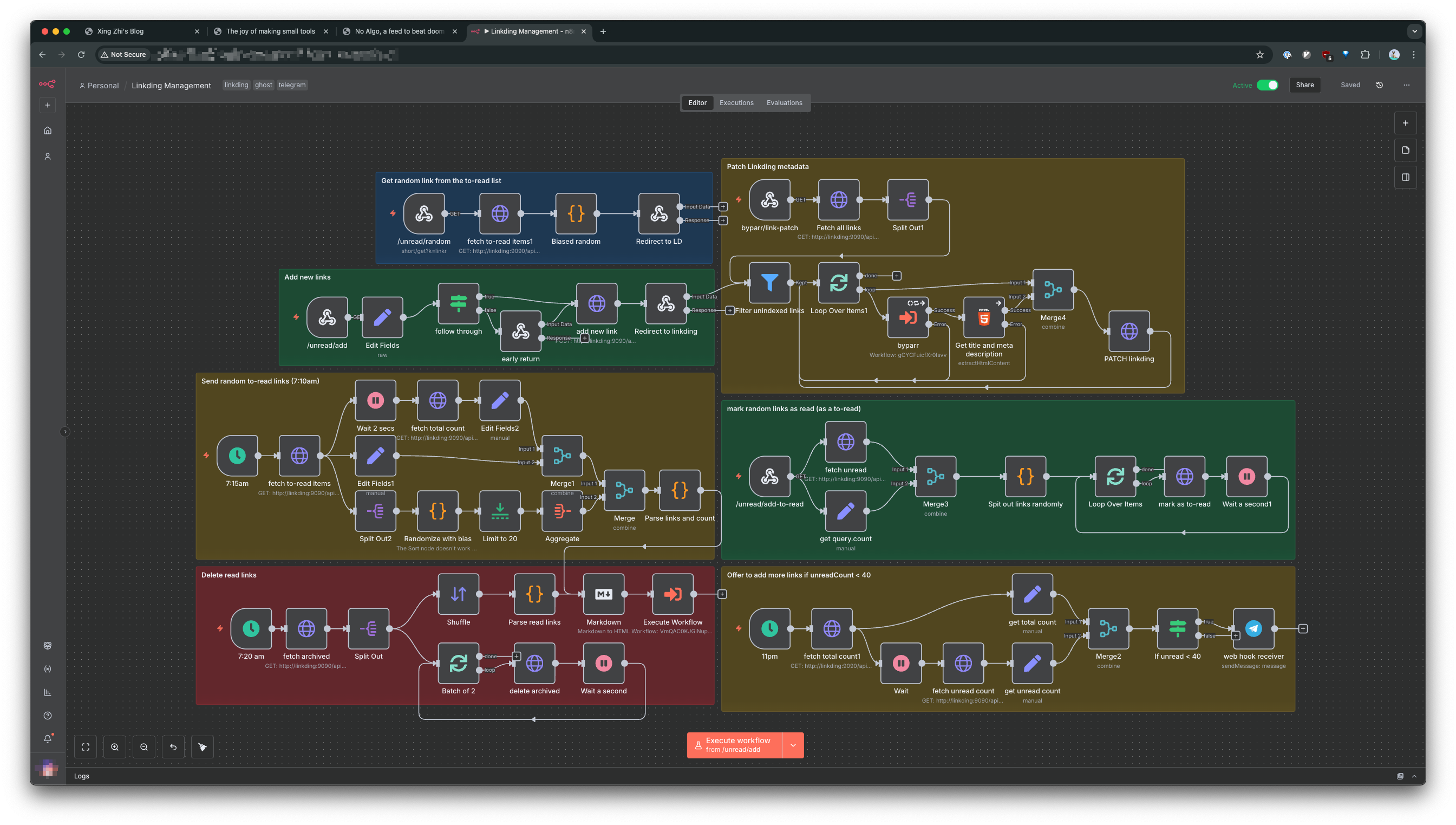

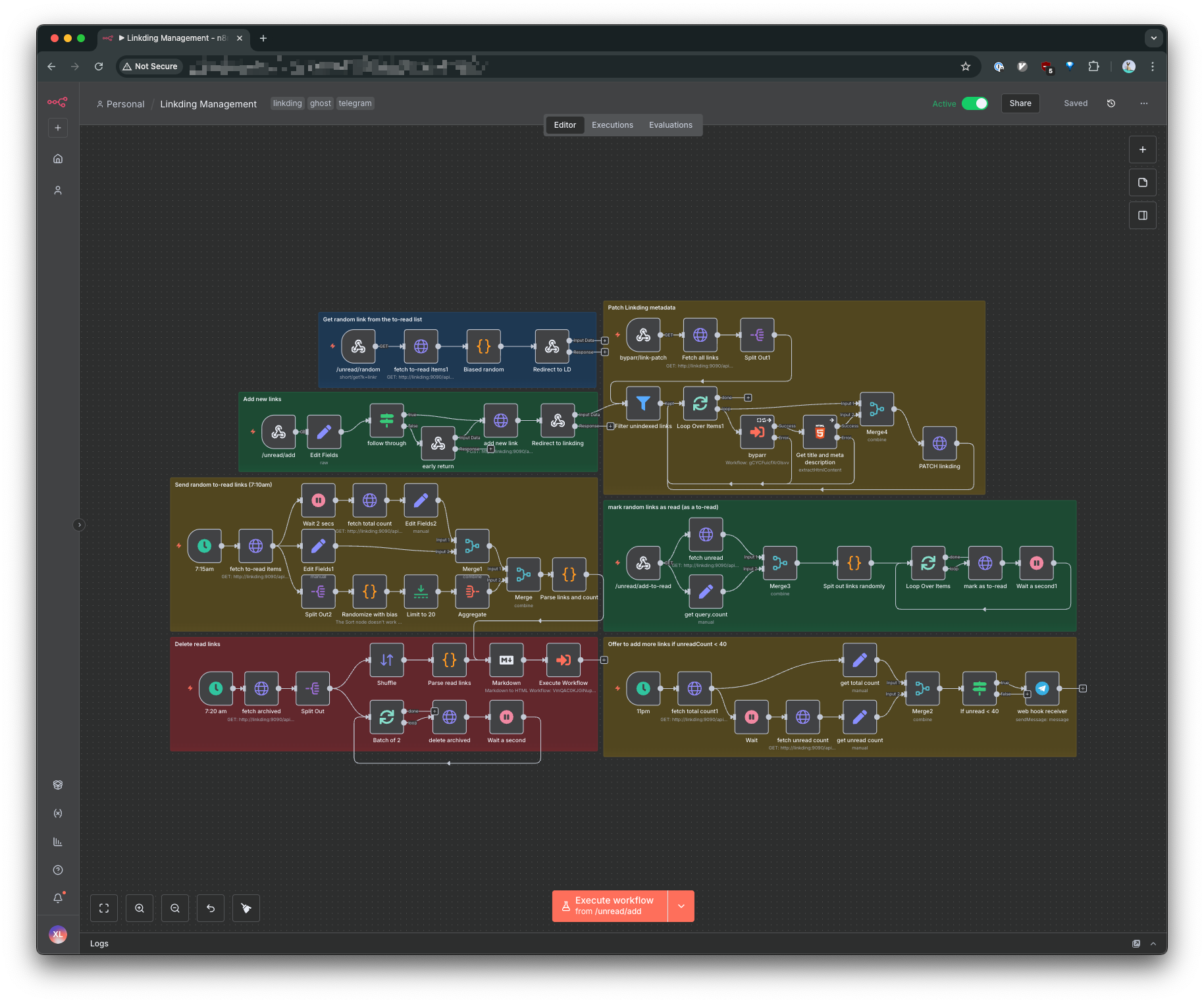

- Nightly cron job to delete read links from my self-hosted linkding instance

- Exposing a GET endpoint to quickly insert links into my linkding instance with tags and other metadata

  - the alternative is to copy and paste links into the UI every time, or use Apple Shortcuts to send a POST request

- Using byparr to work around captchas

- Using byparr to get site metadata to patch my linkding entries that are behind captchas

  - some sites are protected by captchas, and linkding fails to get the metadata or even the title on its own. I use n8n to fetch that content from behind the captchas and patch it back.
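The two linkding items boil down to small data transformations around linkding's REST API. Here is a hedged Python sketch of both, assuming bookmarks come back as JSON objects with id and unread fields and that new bookmarks are created with a body containing url, tag_names and unread; check your linkding version's API docs before relying on those field names. The url and tags query parameter names for the GET endpoint are hypothetical.

```python
from urllib.parse import parse_qs, urlsplit


def read_bookmark_ids(bookmarks: list[dict]) -> list[int]:
    """Nightly cleanup: IDs of bookmarks explicitly marked as no longer unread."""
    # `is False` (not just falsy) so a bookmark missing the field is never deleted.
    return [b["id"] for b in bookmarks if b.get("unread") is False]


def payload_from_get(request_url: str) -> dict:
    """Turn GET ...?url=<encoded url>&tags=a,b into a bookmark-create body."""
    params = parse_qs(urlsplit(request_url).query)
    if "url" not in params:
        raise ValueError("missing 'url' query parameter")
    raw_tags = params.get("tags", [""])[0]
    return {
        "url": params["url"][0],  # parse_qs has already percent-decoded it
        "tag_names": [t.strip() for t in raw_tags.split(",") if t.strip()],
        "unread": True,  # land in the reading inbox
    }
```

In n8n terms, the first function would sit between an HTTP Request node that lists bookmarks and one that deletes each returned ID; the second would sit right after the Webhook node.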

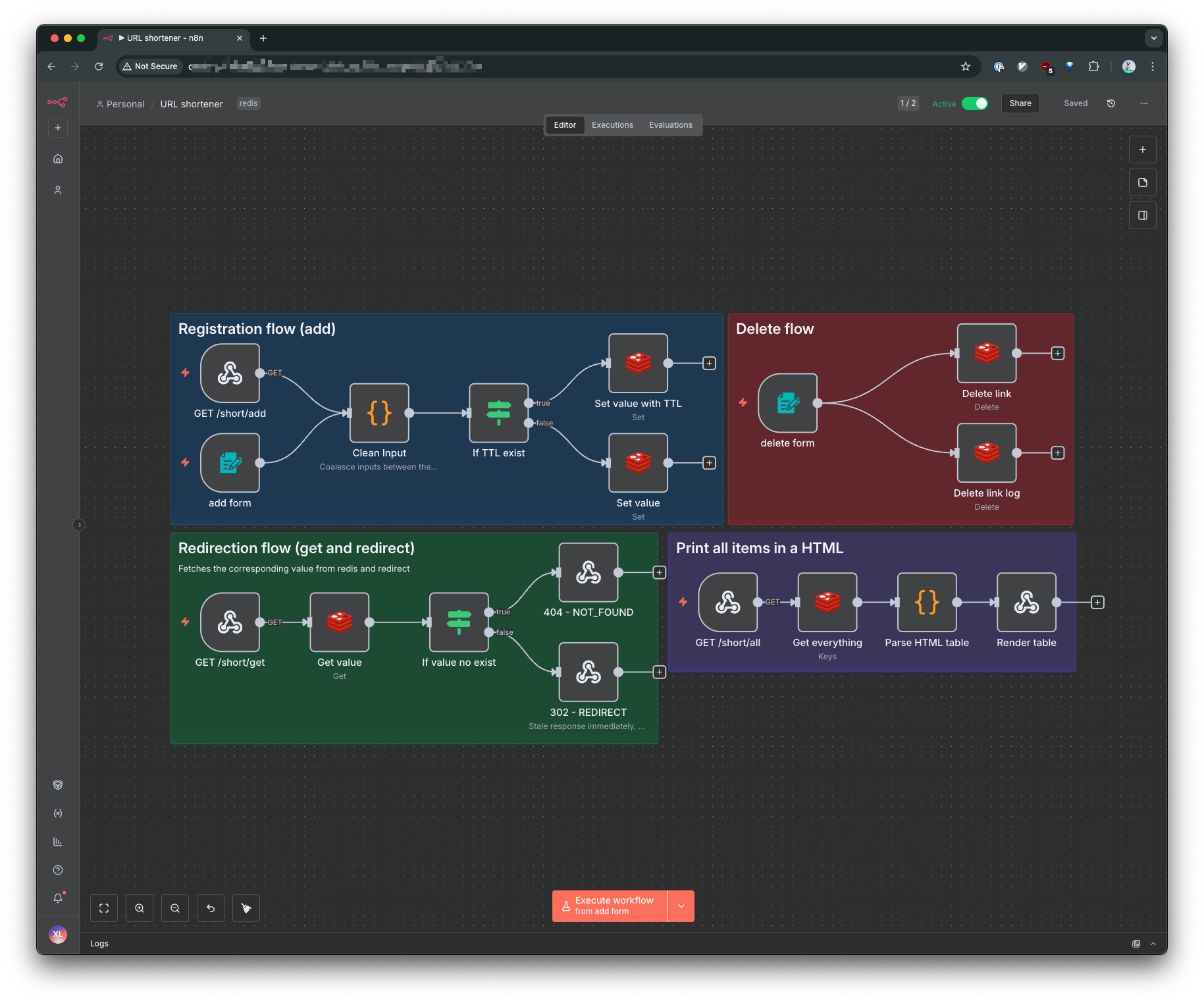

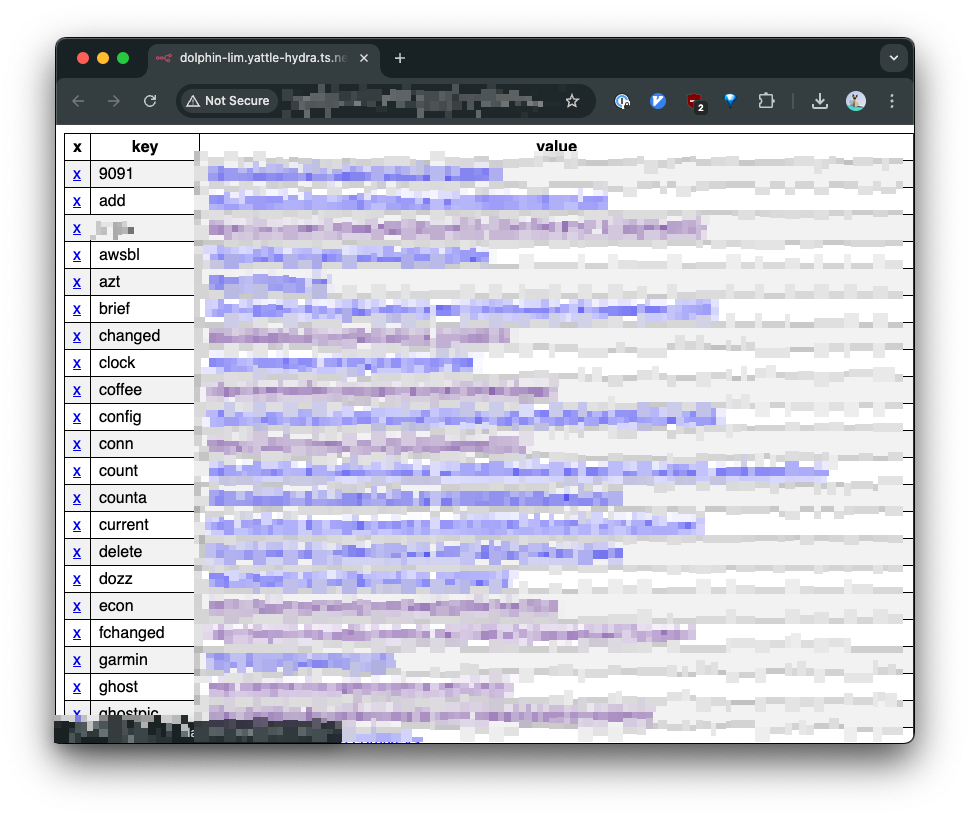
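Once byparr has fetched a page past the captcha, the remaining work is pulling a title and description out of the returned HTML. A minimal standard-library sketch, assuming you already have that HTML as a string: it prefers Open Graph tags and falls back to the title element and the description meta tag. The returned dict is assumed to match the fields you would patch onto the linkding bookmark; the class and function names are hypothetical.

```python
from html.parser import HTMLParser


class MetadataParser(HTMLParser):
    """Collects <title> text plus Open Graph and description meta tags."""

    WANTED = ("og:title", "og:description", "description")

    def __init__(self) -> None:
        super().__init__()
        self.meta: dict[str, str] = {}
        self._in_title = False
        self._title_parts: list[str] = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta":
            key = attributes.get("property") or attributes.get("name")
            content = attributes.get("content")
            if key in self.WANTED and content:
                self.meta.setdefault(key, content)  # keep the first occurrence

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self._title_parts.append(data)

    @property
    def title(self) -> str:
        return " ".join("".join(self._title_parts).split())  # collapse whitespace


def extract_patch(html: str) -> dict[str, str]:
    """Build the fields to patch onto a bookmark, preferring Open Graph values."""
    parser = MetadataParser()
    parser.feed(html)
    parser.close()
    patch: dict[str, str] = {}
    title = parser.meta.get("og:title") or parser.title
    if title:
        patch["title"] = title
    description = parser.meta.get("og:description") or parser.meta.get("description")
    if description:
        patch["description"] = description
    return patch
```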

- A URL shortener built from GET requests, HTTP redirects, and Redis

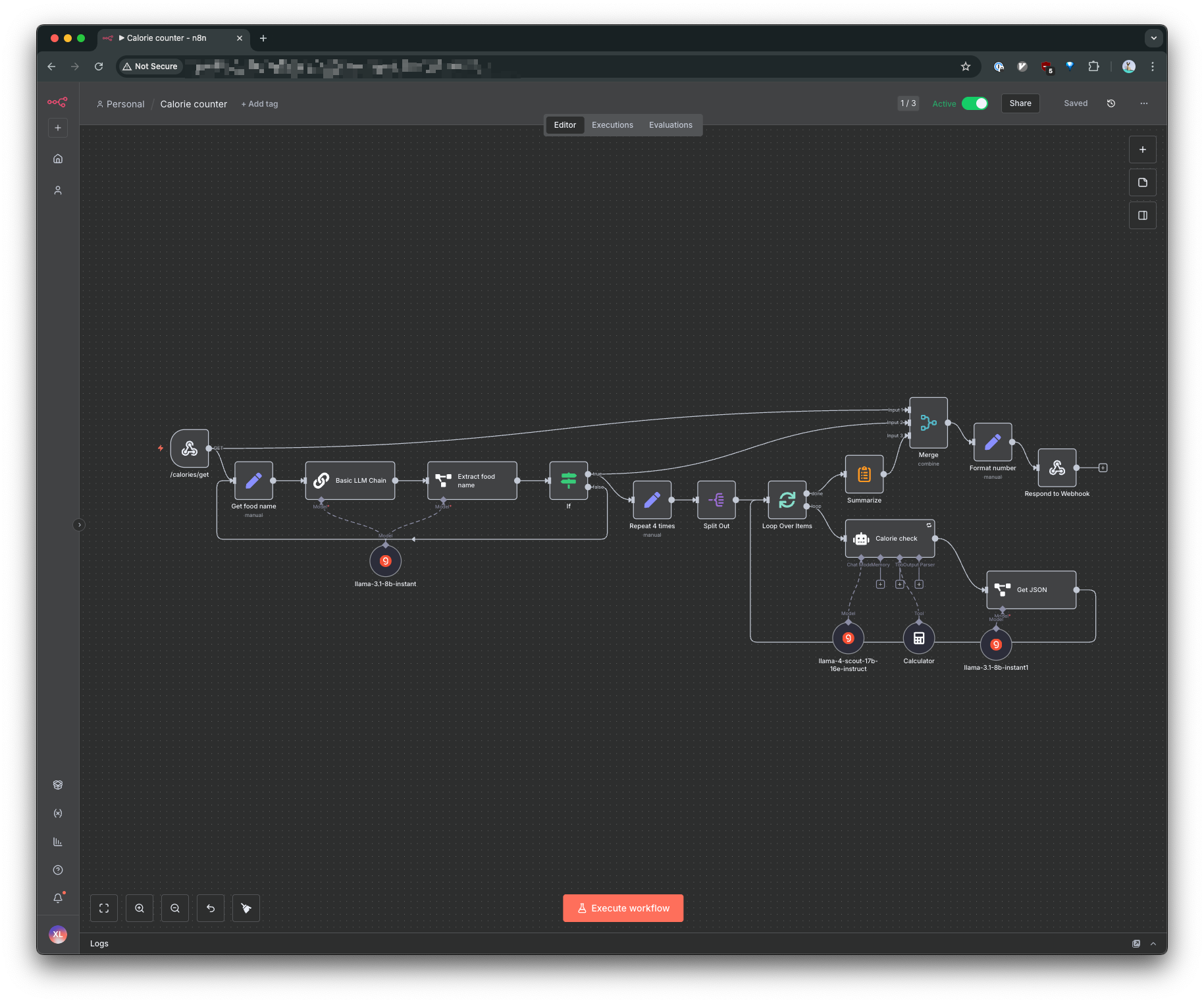
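The shortener is a key-value lookup plus a redirect. A minimal sketch, with a plain dict standing in for Redis: shorten stores a random code, and resolve returns the status and headers a webhook response would need. With Redis, the check-and-store step would be a single atomic SET with the NX option so two concurrent writers can't claim the same code. The class, method names and /s/ path are hypothetical.

```python
import secrets
import string
from typing import Optional

ALPHABET = string.ascii_letters + string.digits


class Shortener:
    """Short code -> target URL; a dict stands in for the Redis instance."""

    def __init__(self, store: Optional[dict[str, str]] = None) -> None:
        self.store = store if store is not None else {}

    def shorten(self, url: str, length: int = 6) -> str:
        if not url.startswith(("http://", "https://")):
            raise ValueError("only http(s) targets are allowed")
        while True:
            code = "".join(secrets.choice(ALPHABET) for _ in range(length))
            # With Redis this check-and-set would be one atomic SET key value NX.
            if code not in self.store:
                self.store[code] = url
                return code

    def resolve(self, code: str) -> tuple[int, dict[str, str]]:
        """Status and headers for GET /s/<code>: redirect if known, else 404."""
        url = self.store.get(code)
        if url is None:
            return 404, {}
        # 302, not 301: browsers cache 301s, which makes retargeting a code painful.
        return 302, {"Location": url}
```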

- LLM-driven calorie counter

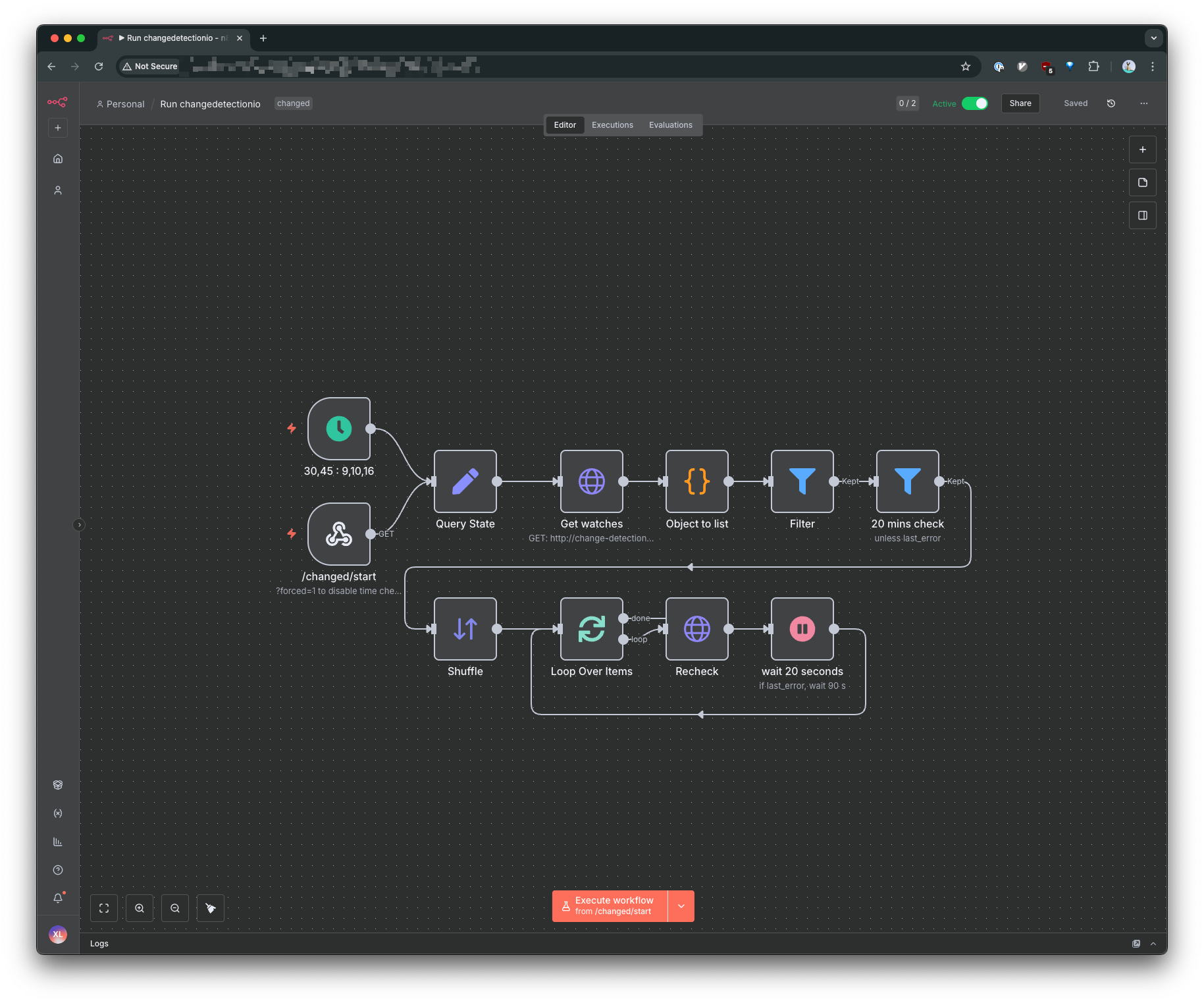

- Controlling website change detection runs via cron jobs and webhooks

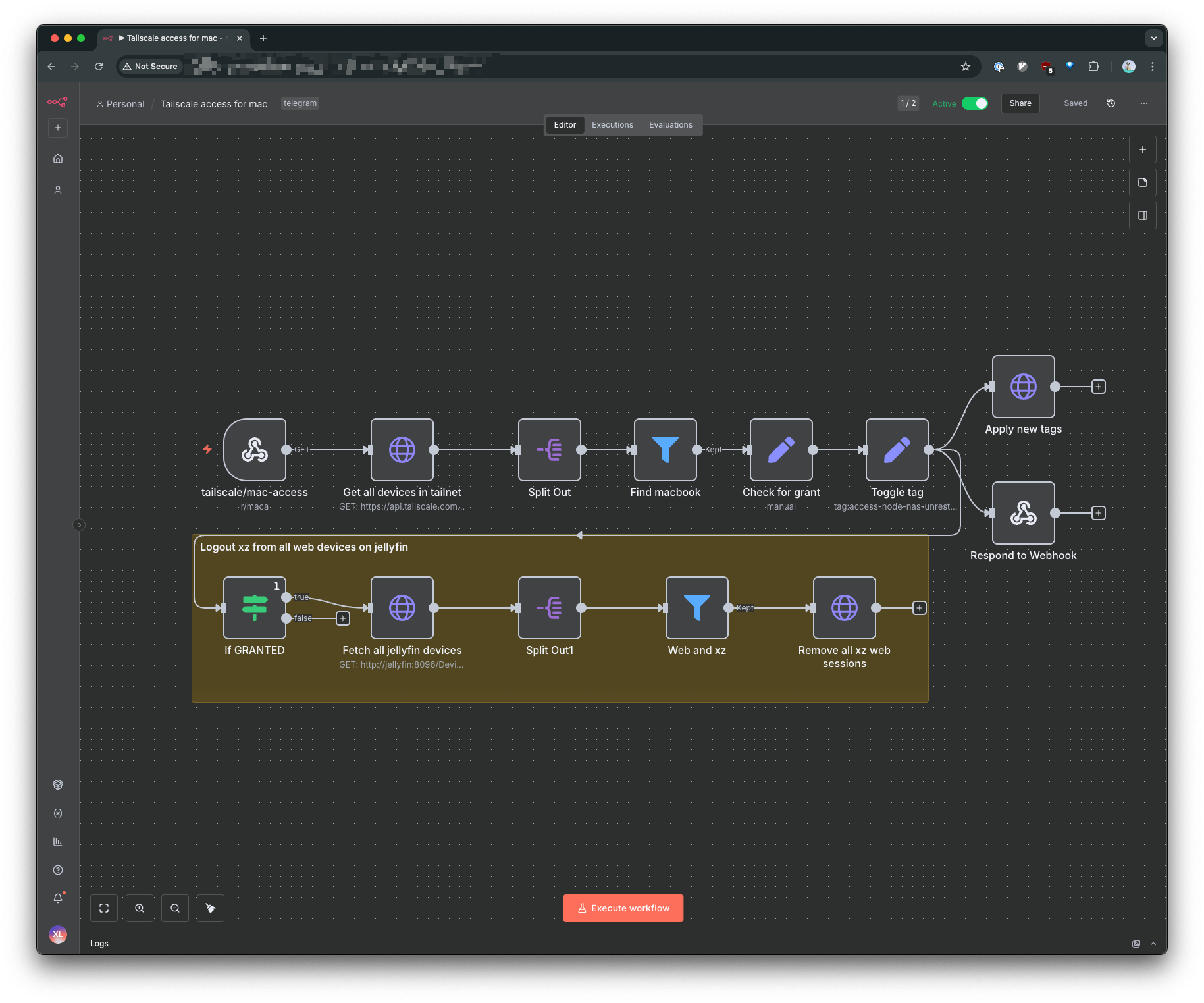

- Toggling my laptop's access to my Jellyfin and manga servers via the Tailscale API

- A daily briefing blog to overcome doom scrolling, powered by RSS and Ghost

- Routine imports of links from my Raindrop account into my linkding reading inbox

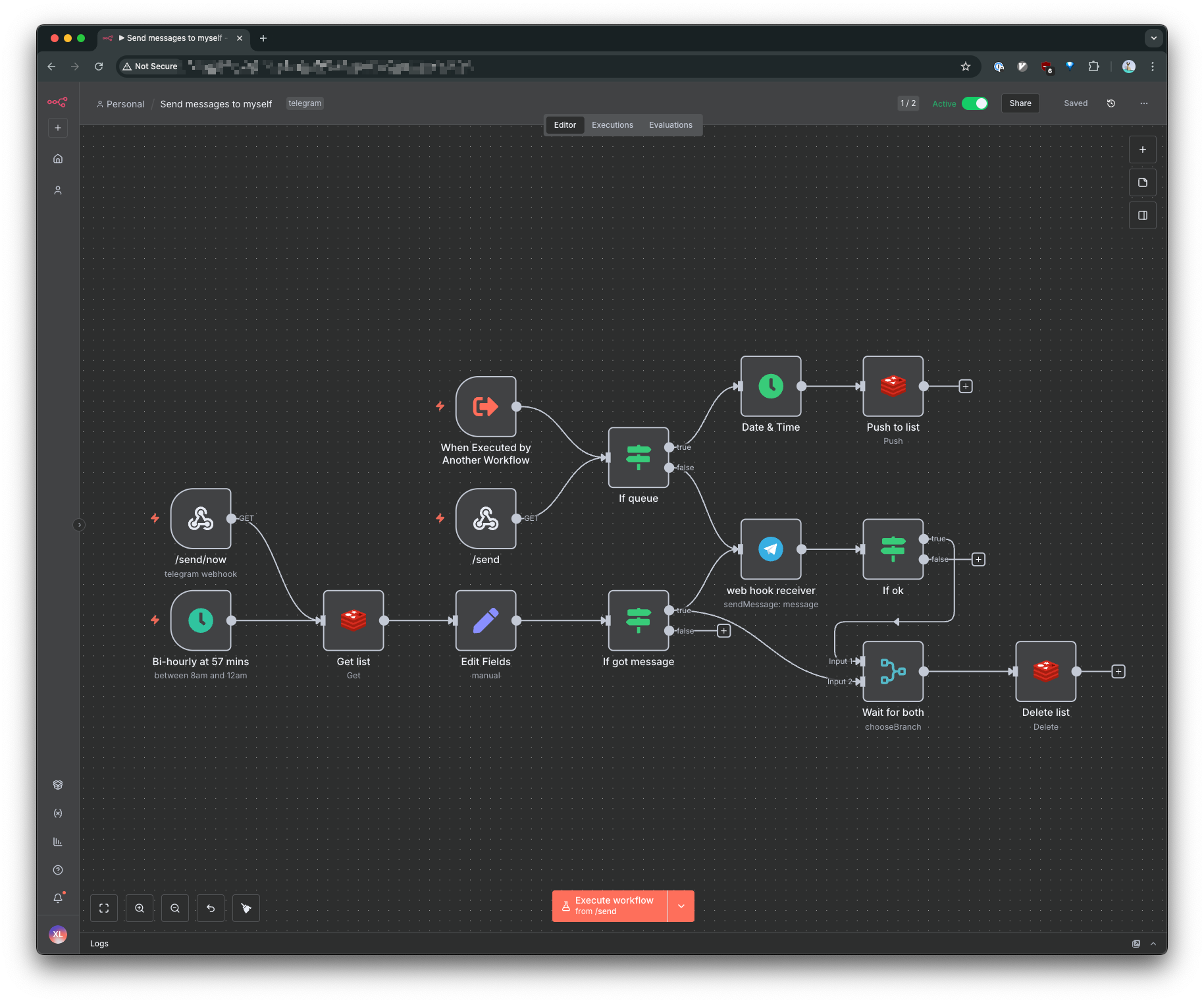

- A universal Telegram notification endpoint, built with webhooks and the Telegram node

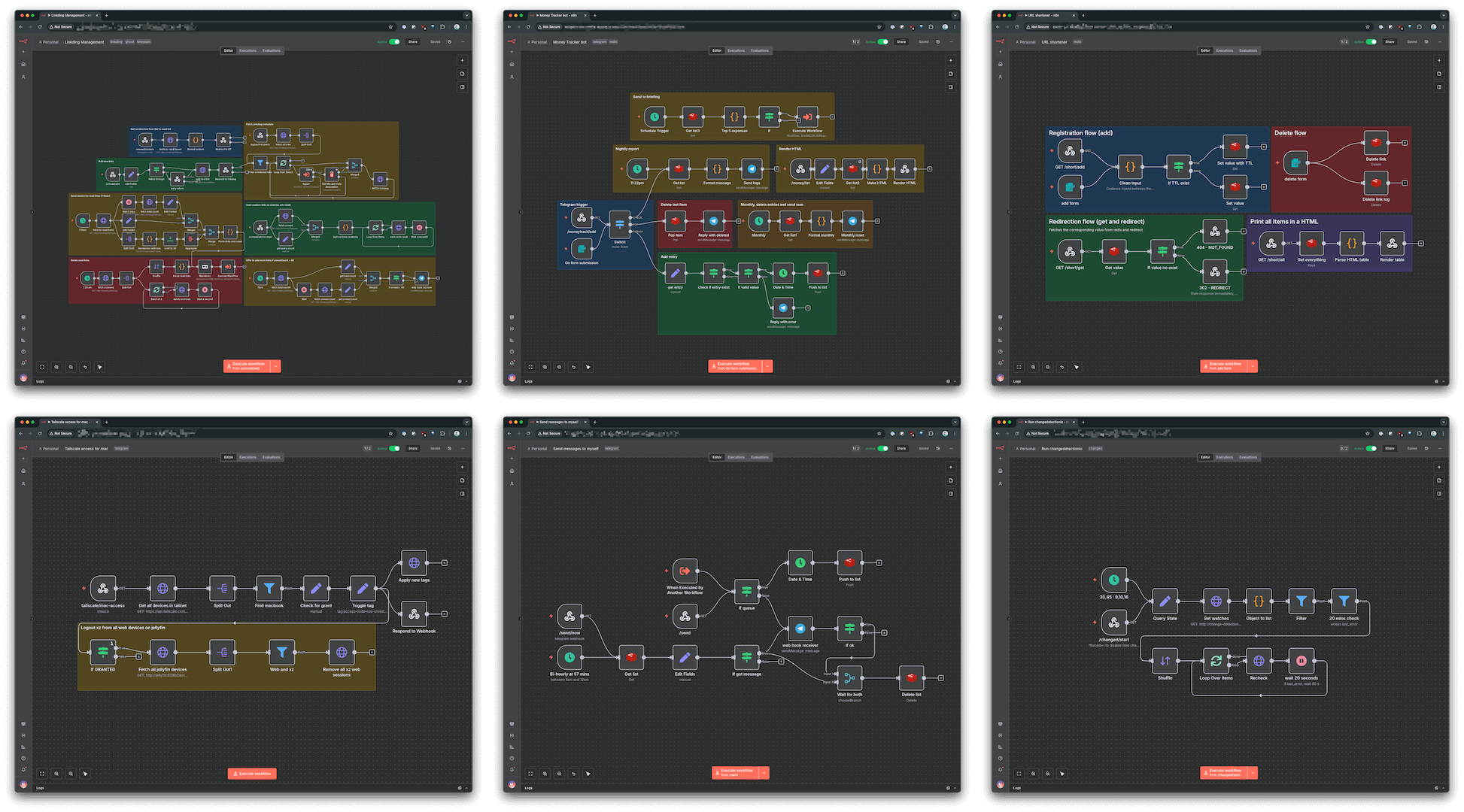

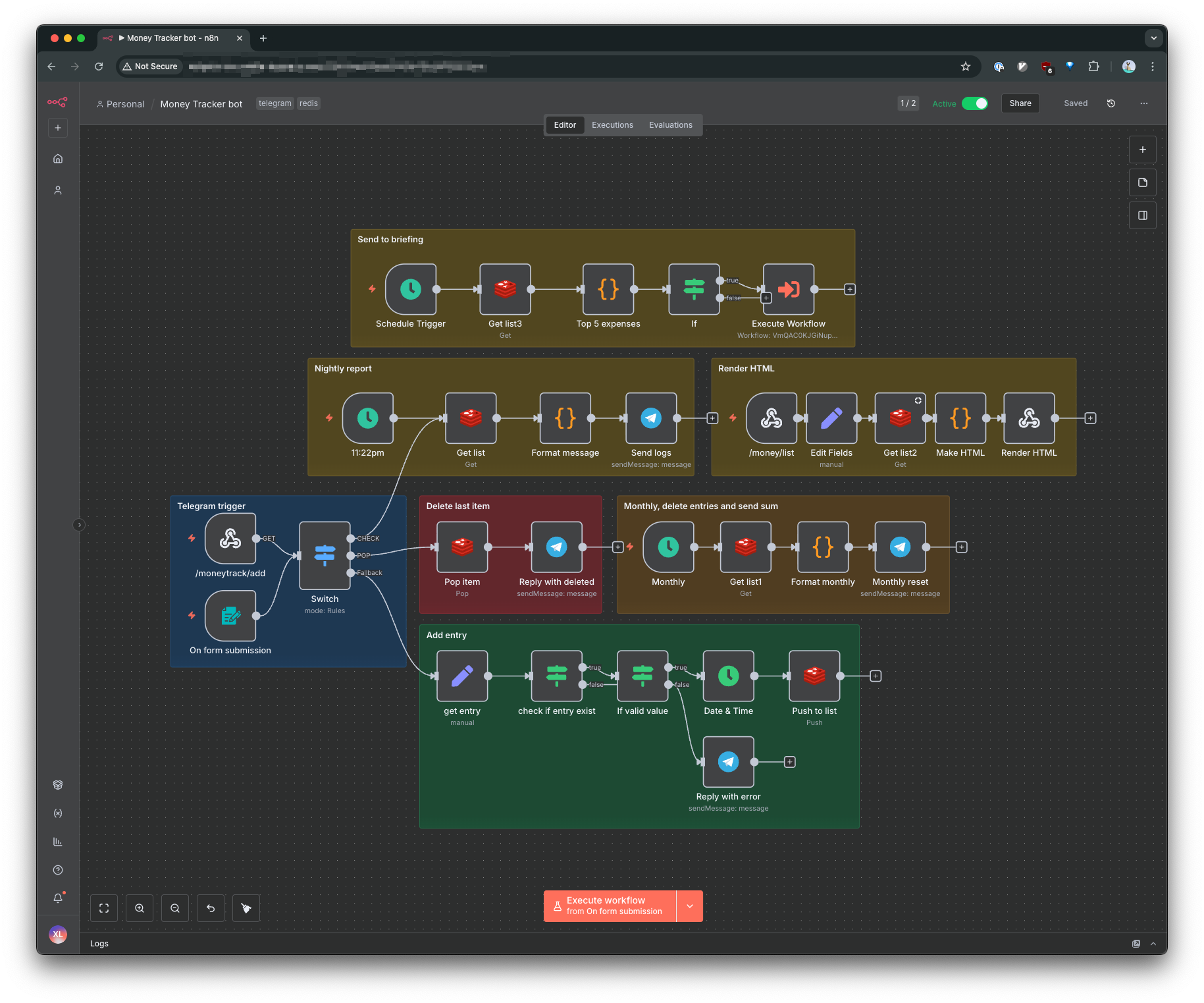

My collection of automations built over the years.
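A universal notification endpoint mostly means normalizing whatever different workflows send into one Telegram message. The sketch below only builds the request for the Telegram Bot API's sendMessage method (a POST to https://api.telegram.org/bot<token>/sendMessage with chat_id and text); the title, message and chat_id fields of the incoming event are a hypothetical contract, and in n8n the Telegram node would make the actual call.

```python
import json
import urllib.request
from typing import Union

TELEGRAM_API = "https://api.telegram.org"


def build_send_message(bot_token: str, chat_id: Union[int, str], text: str) -> urllib.request.Request:
    """Request for the Bot API's sendMessage method; send it with urlopen()."""
    return urllib.request.Request(
        f"{TELEGRAM_API}/bot{bot_token}/sendMessage",
        data=json.dumps({"chat_id": chat_id, "text": text}).encode(),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def notify(event: dict, bot_token: str, default_chat_id: int) -> urllib.request.Request:
    """Normalize a webhook payload from any workflow into one Telegram message."""
    title = str(event.get("title", "n8n"))
    body = str(event.get("message", ""))
    text = f"{title}\n{body}".strip()
    return build_send_message(bot_token, event.get("chat_id", default_chat_id), text)
```

One irony worth knowing: the Bot API carries the bot token in the URL path, exactly what litmus test one warns against, so treat any log that records request URLs as containing a secret.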

Tips from Half a Decade with n8n

Host it on Docker. My n8n instance runs off a compose file with all of the relevant services in the same bridge network. I used to host it on a VPS that costs next to nothing, and that is still a solid recommendation (Fireship's sponsor would agree).

Hide it behind a VPN. I run my n8n instance on my NAS at home and connect to it over Tailscale. This is a great pattern for me: rather than investing in and implementing a full zero-trust setup, I just rely on Tailscale's access control lists (ACLs) to manage access. It's not perfect, since access in my setup is all or nothing, but I am lazy.

Disable the UI and API. To avoid excessive tinkering, you can disable the n8n editor UI and the public API through environment variables in your Docker config (N8N_DISABLE_UI=true and N8N_PUBLIC_API_DISABLED=true). I am not sure if this reduces attack vectors in any meaningful way.

Use S3 services rather than the file system for temporary files. Assuming you followed my advice to run n8n via Docker, you can choose to store files on the file system. This is not a great idea. You have to deal with messy Docker volume binds and file system read/write permissions. It also doesn't clean up after itself neatly, and you have to be intentional about separating directories for different workflows. Personally, I am using a self-hosted MinIO instance and connecting to it via the S3 node (btw, n8n has two versions of this node: one for the actual AWS S3 service, and one for S3-compatible services like MinIO and, I assume, Cloudflare R2). When (not if) a job fails halfway, I can easily clean up its files by deleting a prefix. Different workflows can also use their own little buckets to avoid accidentally touching each other. Still, this is a tip rather than a hard rule.
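The prefix-per-run layout is what makes cleanup cheap. A minimal sketch of one possible key scheme, assuming keys shaped like <workflow>/<execution id>/<file> (drop the workflow segment if each workflow already has its own bucket); the function names are hypothetical, and against MinIO or S3 you would pass the prefix to a listing call, for example boto3's list_objects_v2 with Prefix=, and delete what comes back.

```python
import posixpath


def object_key(workflow: str, execution_id: str, filename: str) -> str:
    """Temporary files live under <workflow>/<execution id>/<filename>."""
    return posixpath.join(workflow, execution_id, filename)


def run_prefix(workflow: str, execution_id: str) -> str:
    # Trailing slash so execution 1042 never matches 10420.
    return f"{workflow}/{execution_id}/"


def keys_to_clean_up(keys: list[str], workflow: str, execution_id: str) -> list[str]:
    """Everything a failed run left behind, given a listing of the bucket."""
    prefix = run_prefix(workflow, execution_id)
    return [key for key in keys if key.startswith(prefix)]
```

The trailing slash in the prefix is the one detail worth keeping if you adapt this: without it, cleaning up one run could also delete files from a run whose ID merely starts with the same digits.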

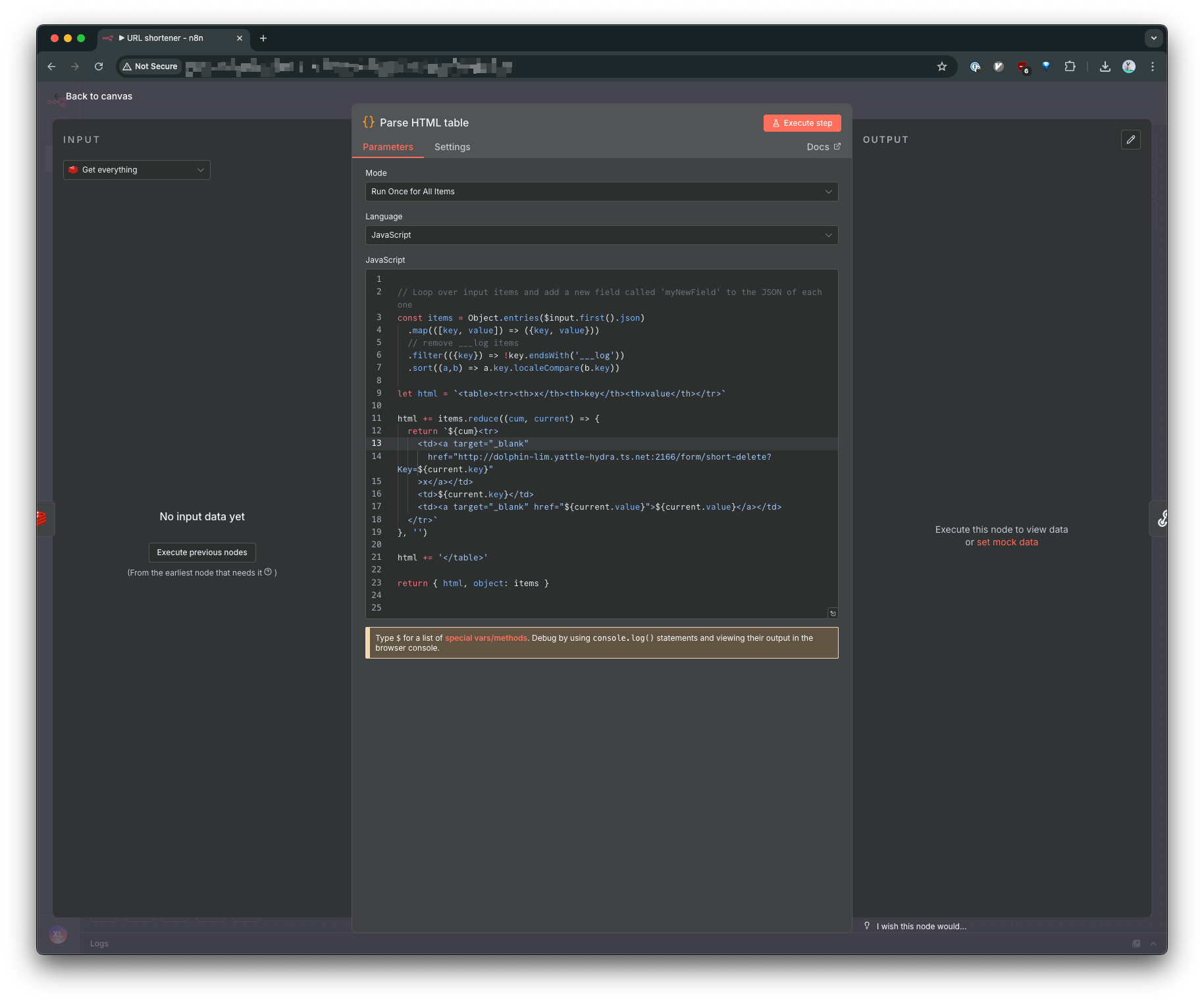

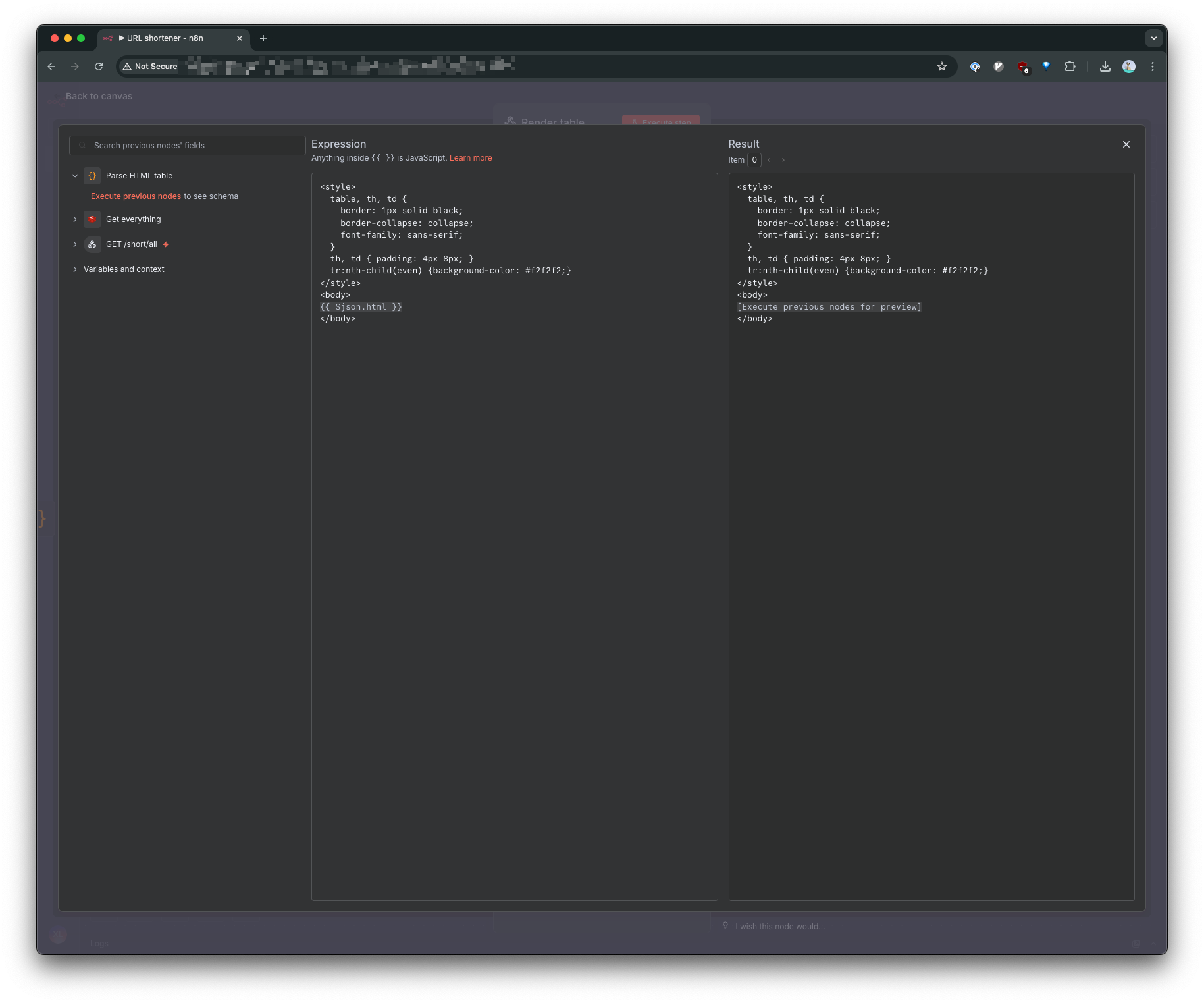

You CAN render HTML pages. n8n provides an HTML node that can generate HTML templates, but I don't really use it. I just use the Code node to render a bunch of HTML and inject it into a prewritten response.

I render the items as a bunch of <li> elements and inject them into a string as an "HTML" file.
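Rendering list items and dropping them into a prewritten page takes only a few lines. A Python sketch, assuming each item has title and url fields (hypothetical names); every value goes through html.escape so a stray & or < in a title can't break the markup. In n8n, the resulting string would be returned from a Respond to Webhook node with a text/html content type.

```python
from html import escape

PAGE = """<!doctype html>
<html>
  <head><meta charset="utf-8"><title>{title}</title></head>
  <body>
    <h1>{title}</h1>
    <ul>
{items}
    </ul>
  </body>
</html>"""


def render_page(title: str, items: list[dict]) -> str:
    """Render each item as an <li> and inject the list into the page template."""
    lis = "\n".join(
        f'      <li><a href="{escape(item["url"])}">{escape(item["title"])}</a></li>'
        for item in items
    )
    return PAGE.format(title=escape(title), items=lis)
```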

ChatGPT was used only to correct grammatical errors; otherwise, no AI was used in writing this article.