No Algo, a feed to beat doomscrolling with a bit of AI

Everywhere we go, every time we have a spare minute, we pull out our phones and doomscroll. We convince ourselves it's productive: keeping up with peers on LinkedIn, following events on Twitter and Reddit, exploring hobbies and new cafés on Instagram and TikTok. At least, that's what we tell ourselves until we realize we've spent a cool weekend afternoon lying on the couch, consuming brain-rot content for the third hour in a row.

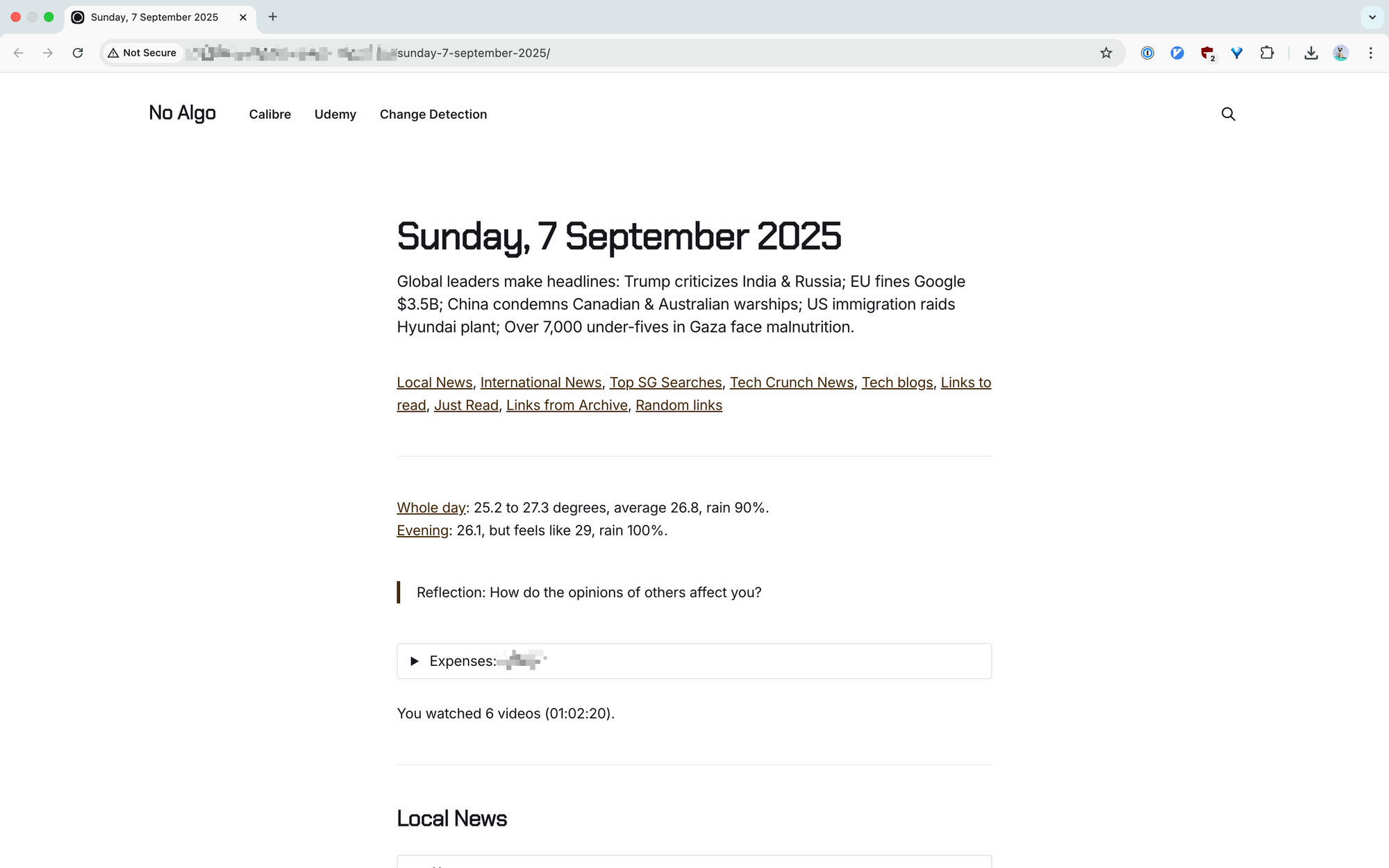

What I'm going to show you is a system I built to (attempt to) curb my doomscrolling. It isn't perfect, since I still doomscroll, but it adds value to my routine and nudges me toward expanding my mind rather than sinking into yet another algorithmic feed. I named it "No Algo" because every day, I choose it over an algorithm. Here's what it looks like today.

Automation, AI, RSS

Months ago, I wrote about the joy of making small tools. I shared how I use a self-hosted instance of n8n to glue different homelab services together: managing the lifecycle of my links archive, scheduling a cron job for Change Detection, that sort of thing.

A little over three months ago, I started a local blog in my homelab. I've been tinkering with it, using it to curate an array of reading materials for me to consume daily.

My day in a view

Every morning, I get a link over Telegram that opens this page. It holds an AI summary of all titles from all my RSS feeds crammed into fewer than 300 characters, a programmatically generated table (list?) of contents, brief stats from my previous day, and pertinent information for my day ahead.

How I use it: Every morning, I review the summary and adjust my evening workout plans based on the forecast. If I am commuting that morning, I do a bit of reflection based on the prompt and check out my top 5 expenses behind that accordion.

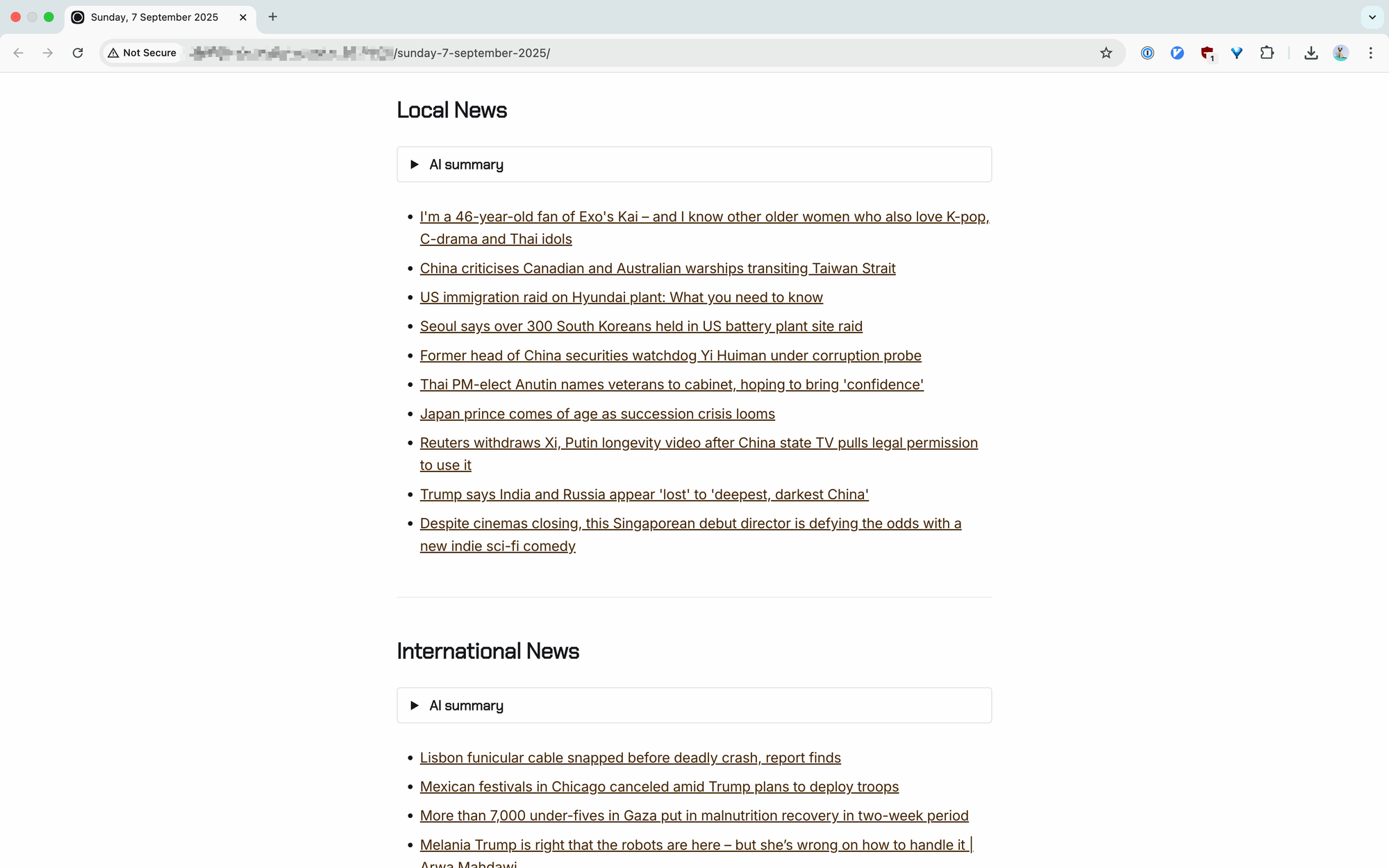

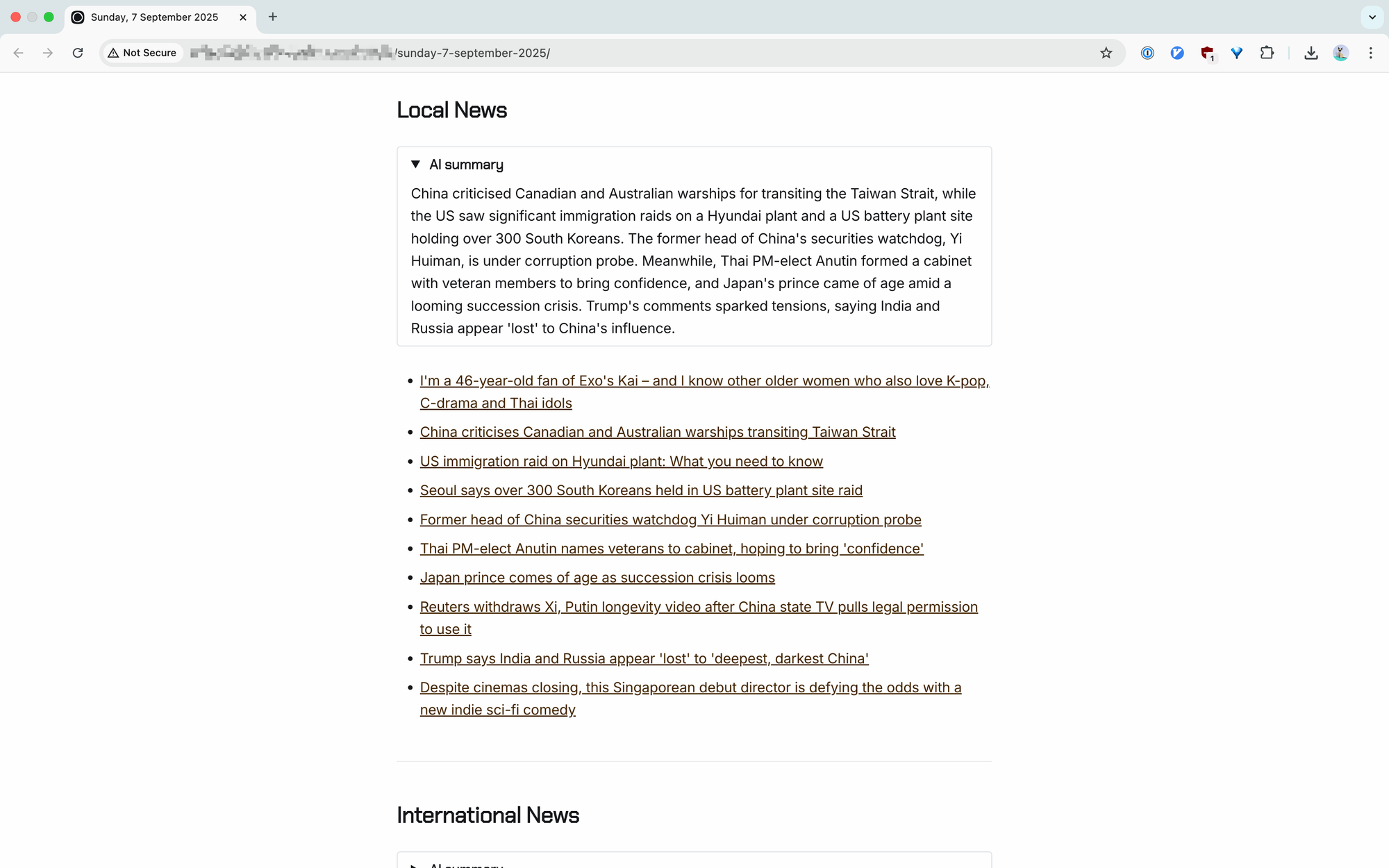

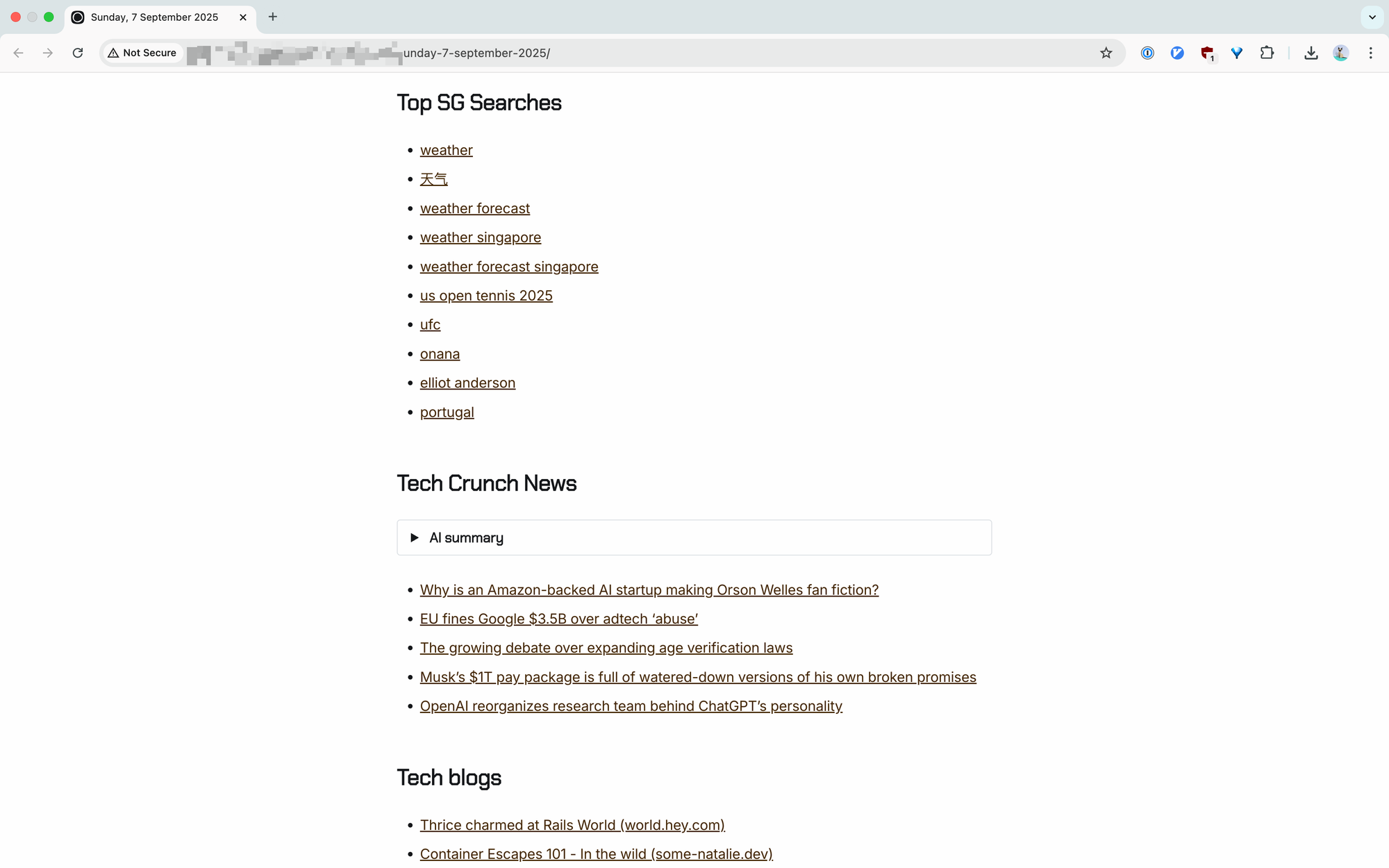

News from RSS

I get my news from RSS feeds from a few sources: Channel News Asia for local news, The Guardian for international news, TechCrunch, top Singapore Google searches, and a privately curated feed of various tech bloggers and publications I have collected over the years. Some feeds have an AI summary hidden behind an accordion for busy mornings when I don't have time to peruse the lists of articles.

How I use it: As part of my morning routine, I open every article that interests me in a new tab in one go and browse them while I am making coffee or commuting. If it's a long article, I add it to my self-hosted links inbox.
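To make the RSS step concrete, here is a minimal sketch of turning a feed into a Markdown list of titles. It is not the actual n8n workflow: the function names and the sample feed are made up for illustration, and it only handles plain RSS 2.0 `<item>` elements (Atom feeds would need different tag names).

```python
import xml.etree.ElementTree as ET

# A tiny inline RSS 2.0 document so the sketch runs without network access.
SAMPLE_FEED = """<?xml version="1.0"?>
<rss version="2.0">
  <channel>
    <title>Example Feed</title>
    <item><title>First story</title><link>https://example.com/1</link></item>
    <item><title>Second story</title><link>https://example.com/2</link></item>
  </channel>
</rss>"""


def parse_rss_items(xml_text: str, limit: int = 10) -> list[dict]:
    """Return up to `limit` items as {"title", "link"} dicts from RSS 2.0 text."""
    root = ET.fromstring(xml_text)
    items = []
    for item in root.iter("item"):
        if len(items) >= limit:
            break
        title = (item.findtext("title") or "").strip()
        link = (item.findtext("link") or "").strip()
        # Skip entries that are missing either field.
        if title and link:
            items.append({"title": title, "link": link})
    return items


def to_markdown_list(items: list[dict]) -> str:
    """Render items as one Markdown bullet per article."""
    return "\n".join(f"- [{item['title']}]({item['link']})" for item in items)


if __name__ == "__main__":
    print(to_markdown_list(parse_rss_items(SAMPLE_FEED)))
```

In a real setup the XML text would come from fetching each feed URL; the parsing and rendering stay the same.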

Jogging my memory

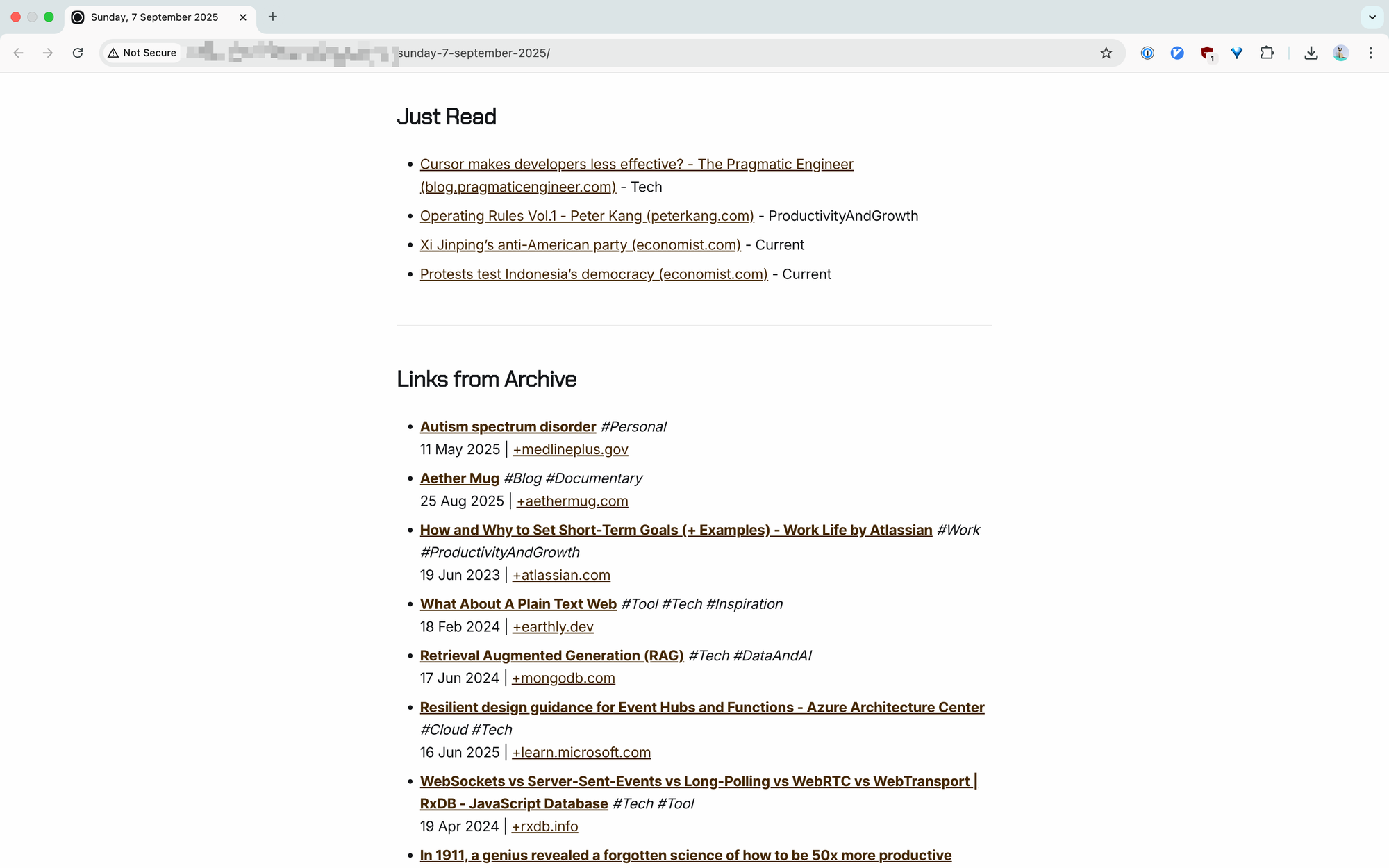

As part of my links archival lifecycle flow, n8n fetches all the links I read the previous day, sends them to this blog under the "Just Read" section, and deletes them from the list.

And to justify my link hoarding on Raindrop.io (where I currently have 900+ saved links), I also call their API to fetch a dozen random links and add them to the daily post.

How I use it: For "Just Read", I go through every title and try to recall an idea from the article before moving on to the next. And for my "Links from Archive", I add any old links that are relevant to my current projects back into Linkding.
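The "dozen random links" idea can be sketched in a few lines. This assumes the archive has already been fetched into a list of dicts; the `title` and `link` keys and the function names are assumptions for illustration, and the Raindrop.io API call itself is left out.

```python
import random
from typing import Optional


def pick_random_links(archive: list[dict], count: int = 12,
                      seed: Optional[int] = None) -> list[dict]:
    """Pick `count` distinct links at random, or all of them if the archive is smaller."""
    rng = random.Random(seed)  # a seed makes the pick reproducible for testing
    return rng.sample(archive, min(count, len(archive)))


def archive_section(links: list[dict]) -> str:
    """Render the picked links as a Markdown list for the daily post."""
    return "\n".join(f"- [{link['title']}]({link['link']})" for link in links)


if __name__ == "__main__":
    # Hypothetical stand-in for 900 saved links.
    archive = [
        {"title": f"Saved link {n}", "link": f"https://example.com/{n}"}
        for n in range(900)
    ]
    print(archive_section(pick_random_links(archive)))
```

Sampling without replacement means the same link never appears twice in one post, though it can resurface on another day.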

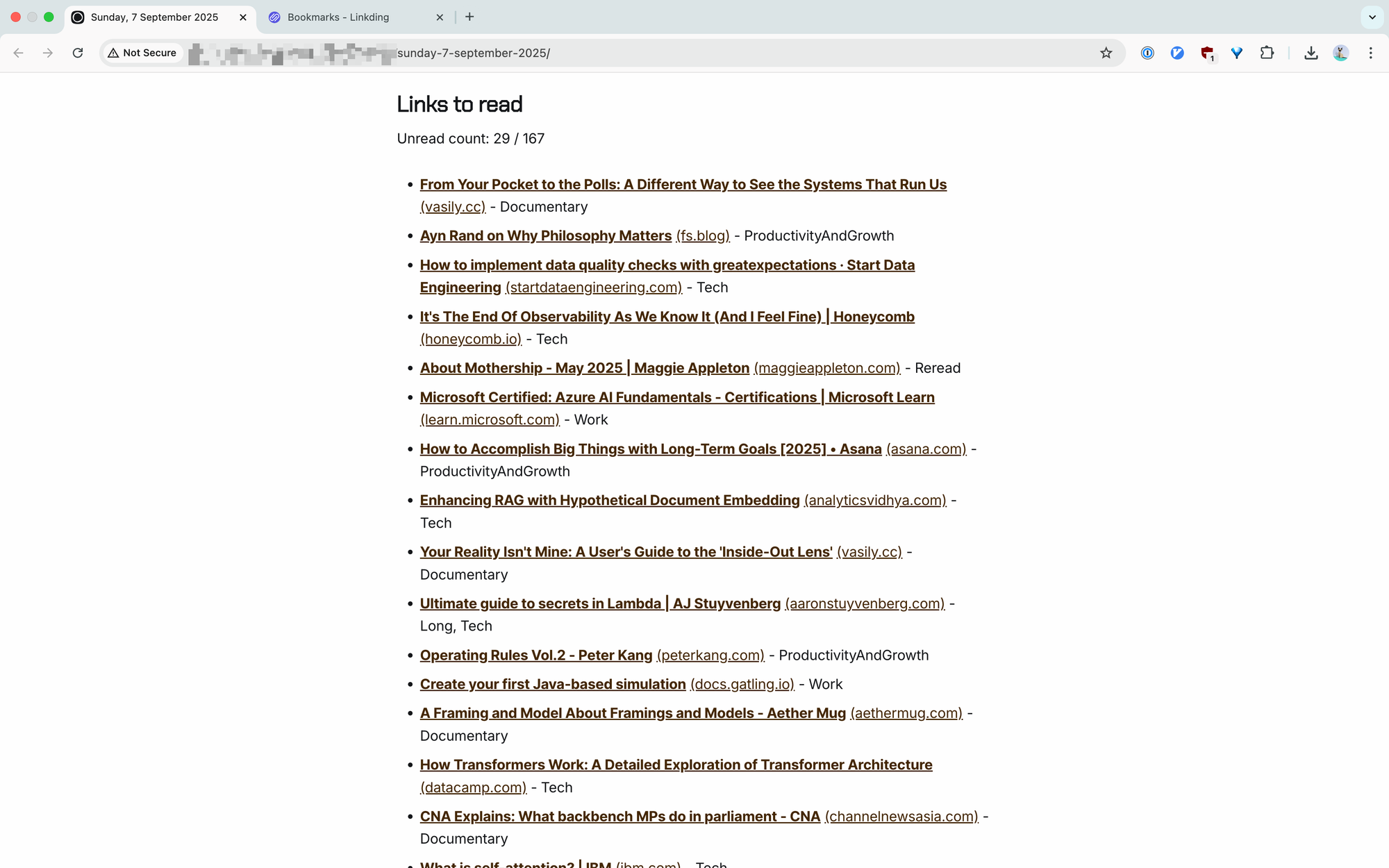

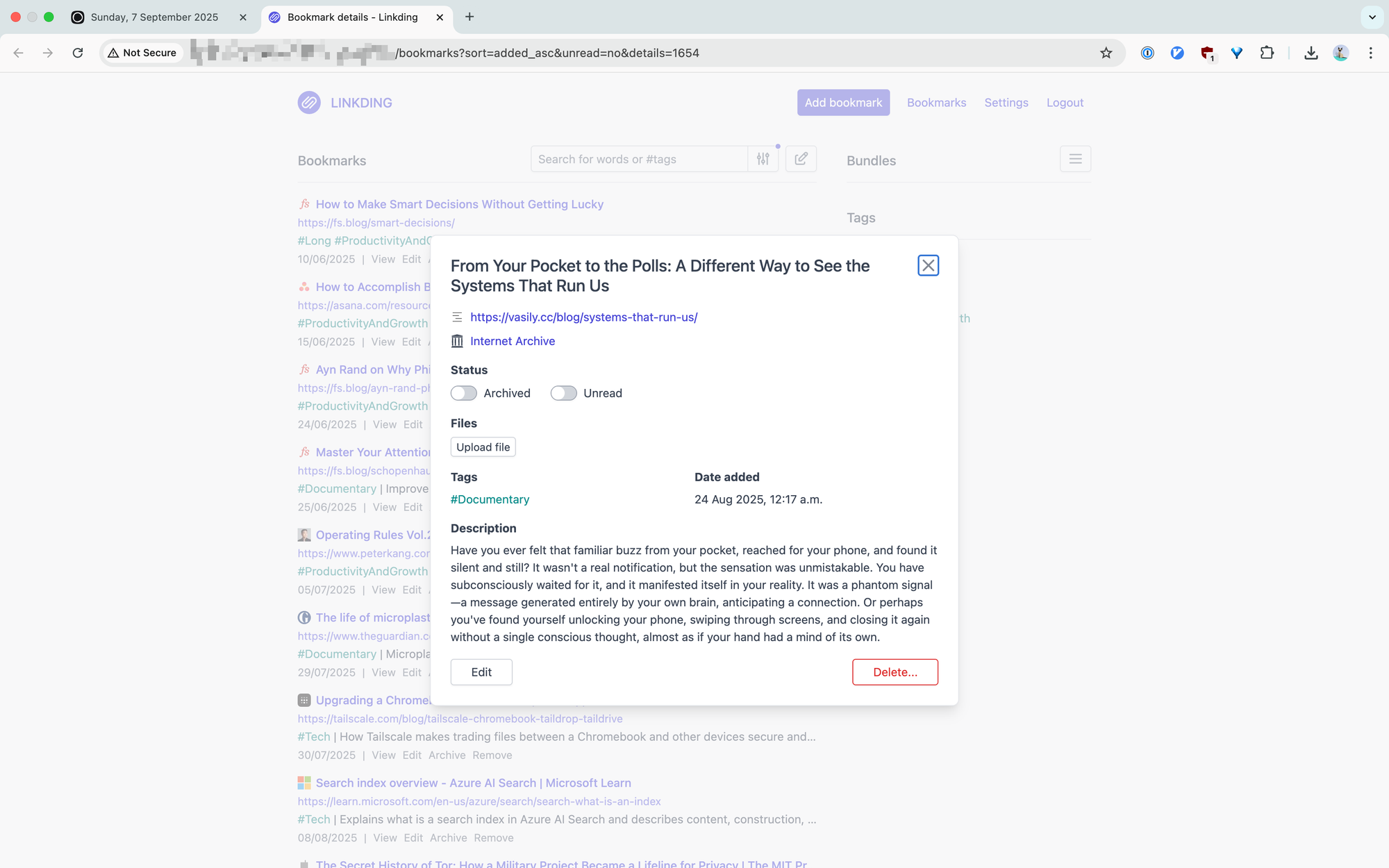

The point of it all is to have links to read.

This is the pièce de résistance of No Algo. While I go about my life, I collect links from newsletters, friends, and work. They all go through my links inbox, Linkding. But what's the use of them just sitting there in a SQLite database, never seeing the light?

The point of No Algo is to reduce the friction of reading links, so that it can give algorithmic feeds a run for their money.

How I use it: Pretty simple. I click on a link, it brings up a Linkding view of the item, and I mark it as read when I am done. If I like it, I add it to Raindrop. Regardless, the link is sent to the "Just Read" section the next morning and deleted from the inbox.

And this is how I keep up with reading my links.

The automation that ties it all together

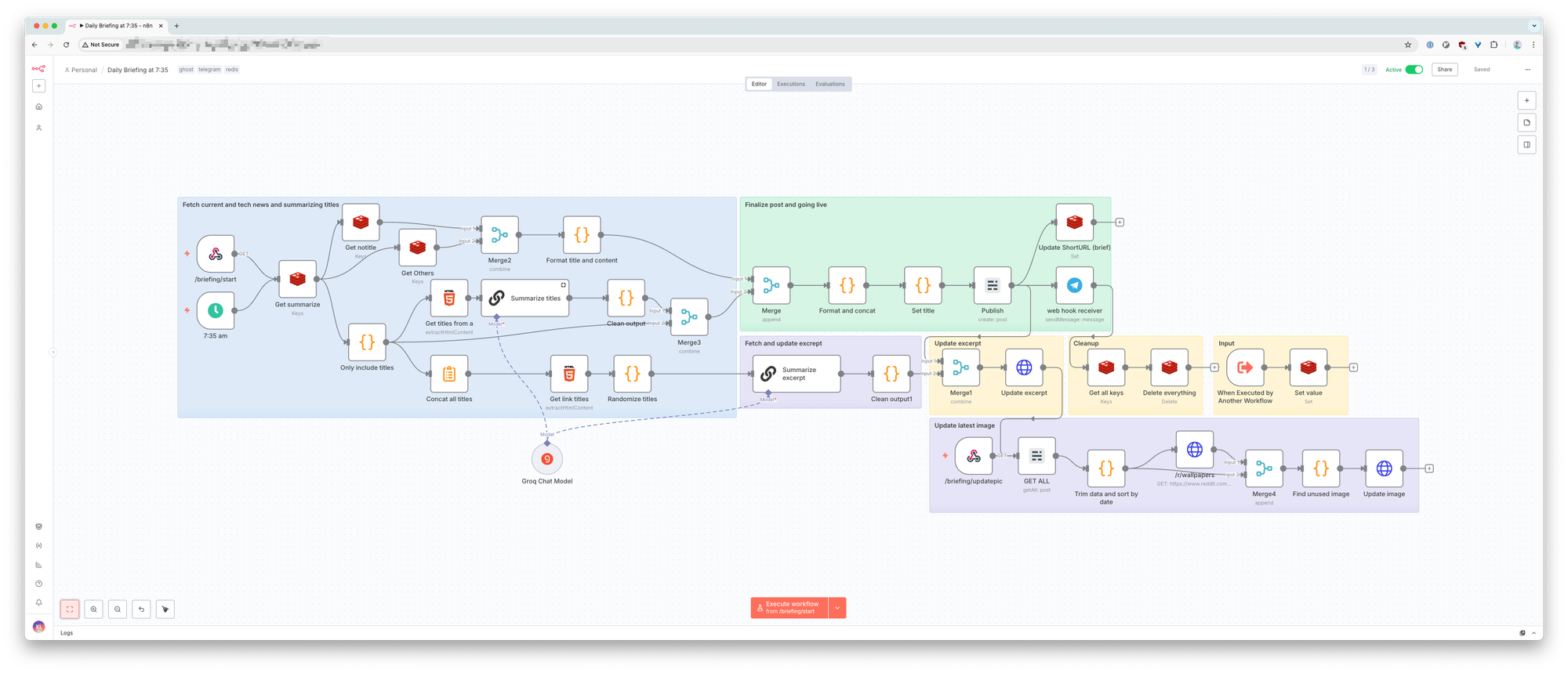

Every morning, automations run to collect items from RSS, Jellyfin, Linkding, and various APIs. Each source is parsed in its own workflow and deposited as a Markdown string into Redis.
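One way to picture the hand-off is one key per section per day. The sketch below is an assumption about how that could look, not the real setup: the `briefing:<date>:<section>` key scheme and the helper names are hypothetical, and a small in-memory class stands in for Redis so the example runs without a server.

```python
from datetime import date
from typing import Optional


class FragmentStore:
    """In-memory stand-in for Redis string keys (set, get, delete)."""

    def __init__(self) -> None:
        self._data: dict[str, str] = {}

    def set(self, key: str, value: str) -> None:
        self._data[key] = value

    def get(self, key: str) -> Optional[str]:
        return self._data.get(key)

    def keys(self, prefix: str) -> list[str]:
        """Return all keys starting with `prefix`, sorted for a stable order."""
        return sorted(k for k in self._data if k.startswith(prefix))

    def delete(self, *keys: str) -> int:
        """Remove the given keys and return how many actually existed."""
        removed = 0
        for key in keys:
            if self._data.pop(key, None) is not None:
                removed += 1
        return removed


def fragment_key(day: date, section: str) -> str:
    # Hypothetical naming scheme: one key per section per day.
    return f"briefing:{day.isoformat()}:{section}"


def deposit(store: FragmentStore, day: date, section: str, markdown: str) -> str:
    """Store one workflow's Markdown output and return the key it was saved under."""
    key = fragment_key(day, section)
    store.set(key, markdown)
    return key


if __name__ == "__main__":
    store = FragmentStore()
    today = date.today()
    deposit(store, today, "news", "- [Story](https://example.com/story)")
    print(store.keys(f"briefing:{today.isoformat()}:"))
```

Keeping the date in the key lets a later step gather everything for one day by prefix and clean it up afterwards.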

Next, the "Daily Briefing" workflow runs, collects the Markdown strings, and parses them into HTML templates. The AI summarization happens at this stage. The HTML content is then published on No Algo as a blog post. A random wallpaper from r/wallpapers is fetched and used as the cover image, and a Telegram message with the link is sent to me before the Markdown content is deleted from Redis.
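The assembly step can be sketched as follows. It is a simplified illustration rather than the actual workflow: it assumes each section arrives as Markdown made of link bullets and plain lines, uses a deliberately tiny hand-rolled converter instead of a full Markdown parser, and leaves out the AI summary, the wallpaper, publishing, and the Telegram message. All function names are hypothetical.

```python
import html
import re

# Matches a single Markdown link bullet such as "- [Title](https://example.com)".
LINK_ITEM = re.compile(r"^- \[(.+)\]\((\S+)\)$")


def markdown_to_html(markdown: str) -> str:
    """Convert link bullets to a <ul> and any other non-empty line to a <p>."""
    out = []
    in_list = False
    for raw_line in markdown.splitlines():
        line = raw_line.strip()
        match = LINK_ITEM.match(line)
        if match:
            if not in_list:
                out.append("<ul>")
                in_list = True
            title, url = match.groups()
            out.append(
                f'<li><a href="{html.escape(url, quote=True)}">{html.escape(title)}</a></li>'
            )
            continue
        if in_list:
            out.append("</ul>")
            in_list = False
        if line:
            out.append(f"<p>{html.escape(line)}</p>")
    if in_list:
        out.append("</ul>")
    return "\n".join(out)


def build_briefing(fragments: dict[str, str]) -> str:
    """Wrap each named Markdown fragment in its own <section>, in insertion order."""
    sections = []
    for name, markdown in fragments.items():
        body = markdown_to_html(markdown)
        sections.append(f"<section>\n<h2>{html.escape(name)}</h2>\n{body}\n</section>")
    return "\n".join(sections)


if __name__ == "__main__":
    print(build_briefing({
        "News": "- [Story](https://example.com/story)",
        "Just Read": "- [Old favourite](https://example.com/old)\nNothing else today.",
    }))
```

Escaping titles and URLs matters here because feed content is untrusted text that ends up inside an HTML page.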

Fin

No Algo shows that we don't have to accept algorithmic feeds as the default. With a few simple automations, it's possible to build routines that serve us rather than distract us. Whether it's RSS, link curation, or just being deliberate about what we consume, a little structure can go a long way in taming doomscrolling.